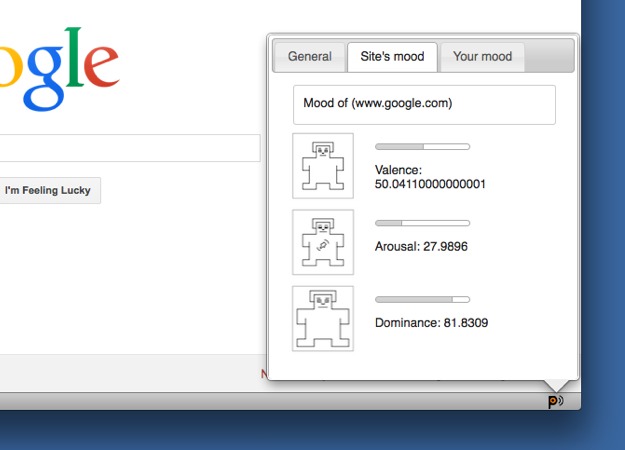

Panemoticon (2012), created in collaboration with John Priestley, is a Firefox add-on that tracks the user’s mouse movements, analyses those data to gauge the user’s mood, and maps that information to create generative music. Affect is mapped onto three dimensions: Valence (positive or negative affect), Dominance (perception of one’s degree of control over self and environment) and Arousal. For visualization, the add-on uses the Self-Assessment Manikin (SAM), a pictorial assessment technique for directly reporting affective responses, developed by Bradley & Lang in the 1980s.

Existing research builds on the idea that users’ affective states can be determined by tracking the most primitive means of interaction on the Web, without the users logging in to any website or volunteering any information. Such research has broad privacy implications. Our intervention is not a mere application of that research, but rather a preemptive critical engagement with it and a multivalent elucidation of its dystopic potential.

The user can listen to the generated music in one of the following modes:

Personal Mode: In this mode the add-on plays music based on the user’s current mood. Mouse movements are tracked and analyzed, and the calculated mood data (valence, arousal, dominance values) are used to generate music. This mode is offline; there is no network communication.

Collective Mode: In this mode the add-on plays sounds based on the aggregate response to the currently displayed website. The user’s mouse movements are tracked and analyzed, and the calculated mood data (valence, arousal, dominance values) are sent to the server to determine the overall mood of the site.

Sleep Mode: The add-on is practically disabled. There is no sound, no network communication, and no mouse tracking.
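
The privacy boundary between the three modes can be summarized in a small sketch. This is not the add-on's actual code: the names `Mode`, `Capabilities`, `Affect` and `outgoing_payload` are hypothetical, and the payload format is an assumption made only to illustrate which mood data leave the browser in each mode. How the server identifies the current site is not described above and is left out.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    PERSONAL = "personal"
    COLLECTIVE = "collective"
    SLEEP = "sleep"


@dataclass(frozen=True)
class Capabilities:
    tracks_mouse: bool
    plays_sound: bool
    uses_network: bool


# What each mode does, as stated in the mode descriptions above.
CAPABILITIES = {
    Mode.PERSONAL: Capabilities(tracks_mouse=True, plays_sound=True, uses_network=False),
    Mode.COLLECTIVE: Capabilities(tracks_mouse=True, plays_sound=True, uses_network=True),
    Mode.SLEEP: Capabilities(tracks_mouse=False, plays_sound=False, uses_network=False),
}


@dataclass(frozen=True)
class Affect:
    # The three affect dimensions; numeric ranges are not specified in the text.
    valence: float
    arousal: float
    dominance: float


def outgoing_payload(mode: Mode, affect: Affect) -> Optional[dict]:
    """Return the mood data that would be sent to the server, or None.

    Hypothetical payload shape: only Collective Mode sends anything.
    """
    if not CAPABILITIES[mode].uses_network:
        return None
    return {
        "valence": affect.valence,
        "arousal": affect.arousal,
        "dominance": affect.dominance,
    }


mood = Affect(valence=0.4, arousal=0.7, dominance=0.2)
for mode in Mode:
    print(mode.value, CAPABILITIES[mode], outgoing_payload(mode, mood))
```

In this sketch Personal Mode still tracks the mouse and plays sound, but `outgoing_payload` returns `None`, so the calculated mood values never leave the browser.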

Panemoticon is a 2012 commission of New Radio and Performing Arts, Inc. for turbulence.org. It was made possible with funding from the National Endowment for the Arts.